
I am currently a Motion Capture Engineer at Mihoyo. Previously, I was a Graphics Engineer at NExT Studio, where we worked on building photorealistic face models. I wrote my master's thesis in the GVV group at MPI-INF, jointly supervised by Prof. Christian Theobalt and Prof. Bastian Leibe. I was a master's student at RWTH Aachen, where I studied computer vision, computer graphics, and machine learning. I received my B.E. degree from the Beijing Institute of Technology in 2017. I'm interested in computer vision, computer graphics, and applications such as AR/VR that combine both.


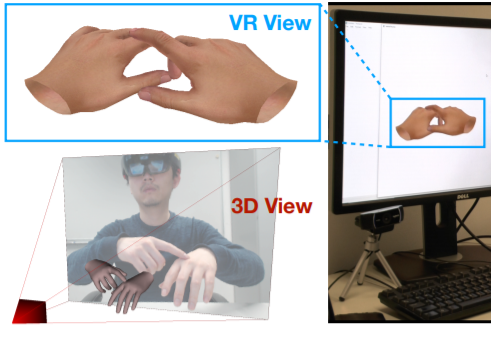

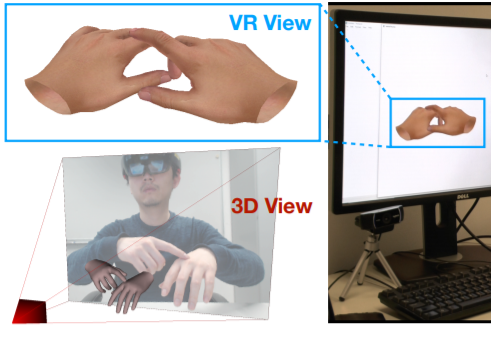

Jiayi Wang, Franziska Müller, Florian Bernard, Suzanne Sorli, Oleksandr Sotnychenko, Neng Qian, Miguel A. Otaduy, Dan Casas, Christian Theobalt

SIGGRAPH Asia, 2020

Project Page

Tracking and reconstructing the 3D pose and geometry of two interacting hands is a challenging problem with high relevance for several human-computer interaction applications, including AR/VR, robotics, and sign language recognition. Existing works are either limited to simpler tracking settings (e.g., a single hand or two spatially separated hands) or rely on less ubiquitous sensors such as depth cameras. In contrast, in this work we present the first real-time method for motion capture of the skeletal pose and 3D surface geometry of both hands from a single RGB camera that explicitly considers close interactions. To address the inherent depth ambiguities in RGB data, we propose a novel multi-task CNN that regresses multiple complementary pieces of information, including segmentation, dense matchings to a 3D hand model, and 2D keypoint positions, together with newly proposed intra-hand relative depth and inter-hand distance maps. These predictions are subsequently used in a generative model-fitting framework to estimate the pose and shape parameters of a 3D hand model for both hands. We experimentally verify the individual components of our RGB two-hand tracking and 3D reconstruction pipeline through an extensive ablation study. Moreover, we demonstrate that our approach offers previously unseen two-hand tracking performance from RGB, and that it quantitatively and qualitatively outperforms existing RGB-based methods that were not explicitly designed for two-hand interactions. Our method even performs on par with depth-based real-time methods.


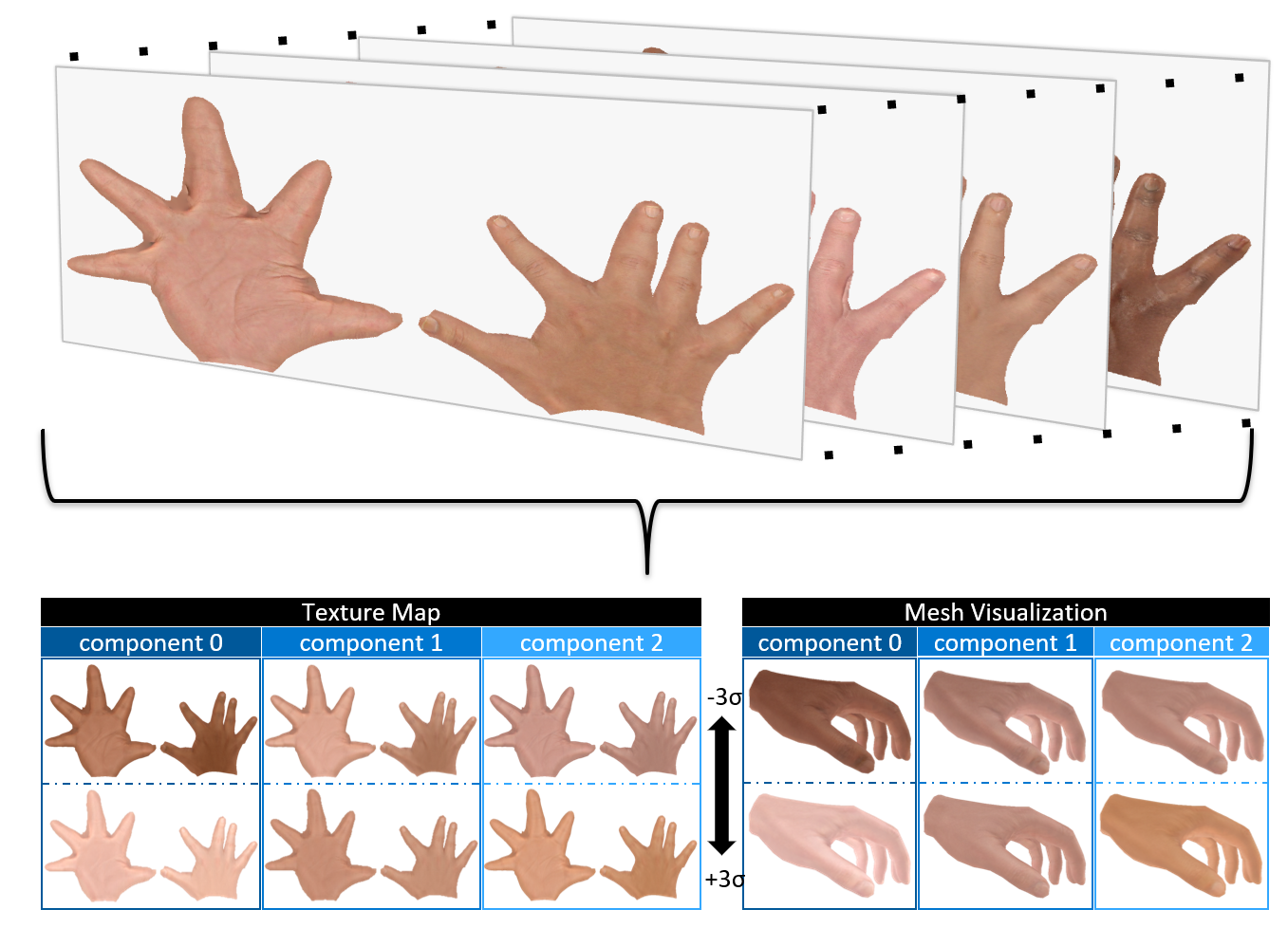

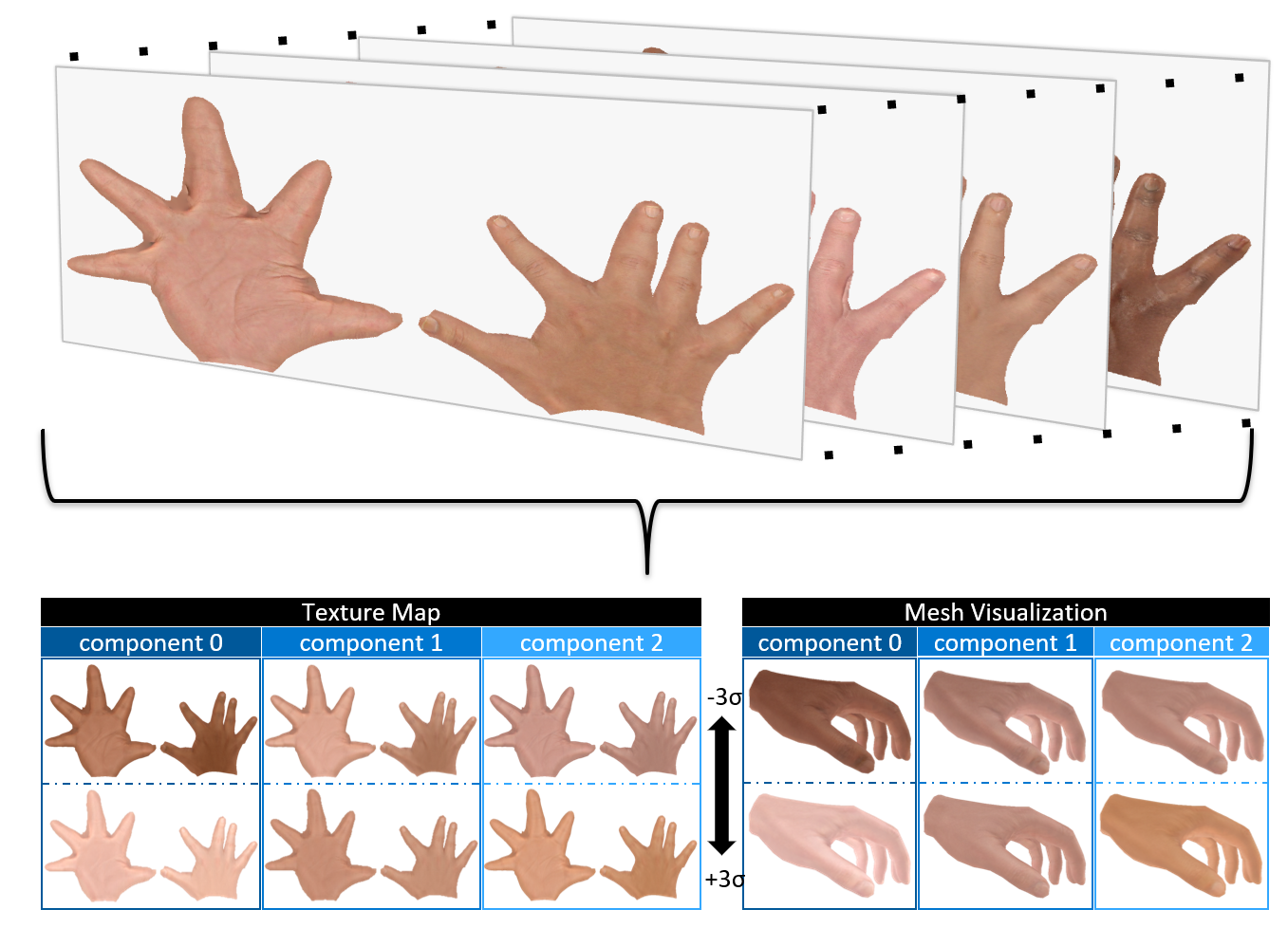

Neng Qian, Jiayi Wang, Franziska Müller, Florian Bernard, Vladislav Golyanik, Christian Theobalt

ECCV, 2020

Project Page / Paper

We build the first parametric texture model of human hands. The model is registered to the popular MANO hand model. Furthermore, our model can be used to define a neural rendering layer that enables training with a self-supervised photometric loss. We make our model publicly available.


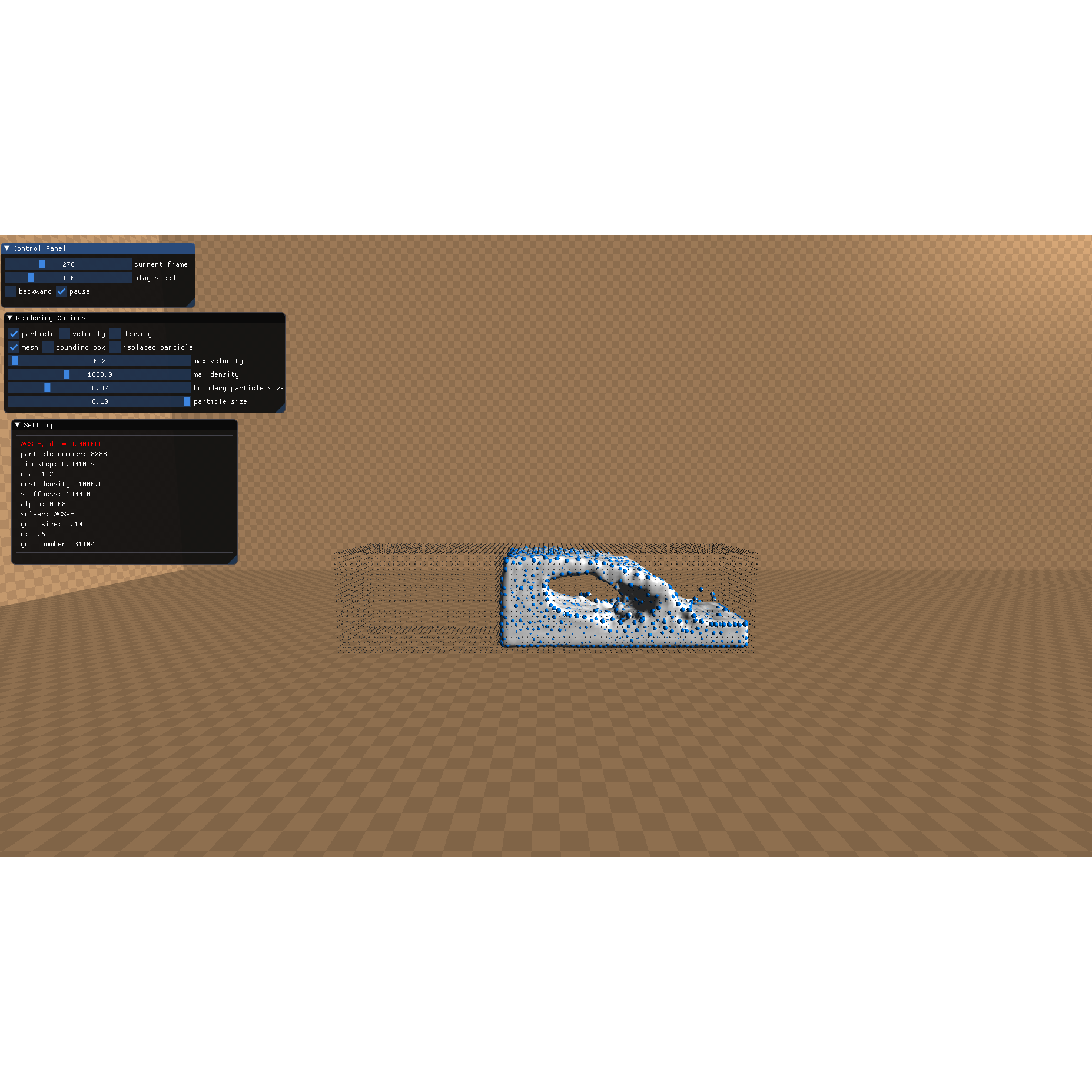

Neng Qian, Chui Chao

Code

This project was developed for the fluid simulation lab course in the 2018 summer semester (SS) at RWTH. It includes two particle-based fluid simulation solvers (PBF and WCSPH) and a visualization program based on Merely3D and imgui. It applies marching cubes to reconstruct the fluid surface.


An Orc warrior in World of Warcraft. Lok Tar Ogar!


Thanks to Jon Barron for the awesome website template.